Squashed Expectations: The Intriguing Saga of Classifying Produce with Machine Learning

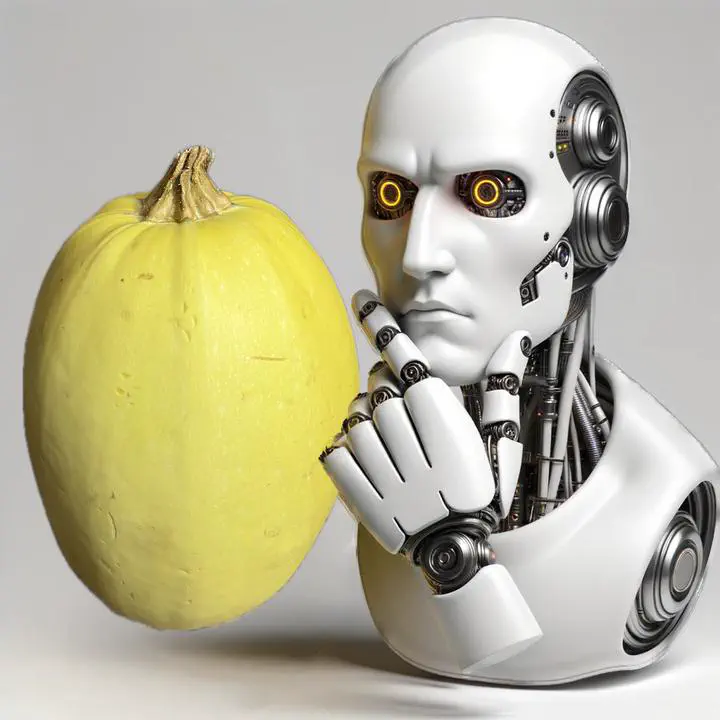

After diving back into the Practical Deep Learning for Coders course, I decided to take on a new challenge: crafting an image classifier for the elusive spaghetti squash. Why spaghetti squash? Picture this: you place an order for spaghetti squash, but the universe plays a cruel joke and delivers its culinary doppelgänger instead: a honeydew melon. Honeydew and meatballs, anyone?

Photo by Eric Prouzet, via Unsplash

Photo by Daniel Dan, via Unsplash

Embracing a dash of chaos, I threw butternut squashes into the machine learning mix as a wild card.

Building this model turned out to be anything but straightforward. The data cleaning process was a beast. More of the images came from recipe blogs than showed raw produce, and my dataset was chock full of squash casseroles and melon salads. Imagine training a facial recognition model on Halloween masks; that's what it felt like.
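Cleaning mostly meant looking at each image and deciding whether to keep it, drop it, or fix its label. fastai's `ImageClassifierCleaner` widget from the course offers exactly those choices. The sketch below is a plain-Python stand-in for applying such decisions, assuming the labels are kept in a dict mapping filename to class; the filenames, class names, and the `"delete"` marker are all hypothetical.

```python
# Hypothetical marker: a decision of "delete" drops the image,
# any other string is treated as a corrected label.
DELETE = "delete"


def apply_review(labels: dict[str, str], decisions: dict[str, str]) -> dict[str, str]:
    """Return a cleaned copy of `labels` (filename -> class) after applying review decisions."""
    cleaned = {}
    for fname, label in labels.items():
        decision = decisions.get(fname)
        if decision == DELETE:
            continue  # e.g. a squash casserole or melon salad rather than raw produce
        # Keep the original label unless the reviewer supplied a new one.
        cleaned[fname] = decision if decision is not None else label
    return cleaned


labels = {
    "squash_001.jpg": "spaghetti_squash",
    "squash_002.jpg": "spaghetti_squash",
    "melon_007.jpg": "honeydew",
}
decisions = {
    "squash_002.jpg": DELETE,             # a casserole photo: drop it
    "melon_007.jpg": "spaghetti_squash",  # mislabelled: fix it
}
print(apply_review(labels, decisions))
# {'squash_001.jpg': 'spaghetti_squash', 'melon_007.jpg': 'spaghetti_squash'}
```

The original dict is left untouched, so a cleaning pass can be compared against the raw data before committing to it.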

Desperate times called for desperate measures: enter convnext_base_in22ft1k. According to the performance charts in one of Jeremy Howard's notebooks, ConvNeXt models are quite accurate on ImageNet at a reasonable speed. I hoped this more complex model could eke out a bit more accuracy from my flawed dataset. Boy, was I wrong! Rather than learning the traits that distinguish melons from squashes, the bigger model simply memorized the images in its training set. When it was tested on the validation set (images it had not seen before), it bombed. This failure to generalize is called “overfitting.”
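The validation set is just a random slice of the labelled images held out from training, so a score on it measures generalization rather than memorization. Here is a minimal sketch of such a split in plain Python, in the spirit of fastai's `RandomSplitter(valid_pct=0.2, seed=42)` but not its actual implementation; the function name and filenames are hypothetical.

```python
import random


def split_train_valid(items, valid_pct=0.2, seed=42):
    """Shuffle a copy of `items` with a fixed seed and hold out `valid_pct` of them for validation."""
    rng = random.Random(seed)  # a private RNG, so the split is reproducible
    shuffled = list(items)
    rng.shuffle(shuffled)
    n_valid = int(len(shuffled) * valid_pct)
    # The model trains only on the first list and is scored on the second.
    return shuffled[n_valid:], shuffled[:n_valid]


images = [f"img_{i:03d}.jpg" for i in range(10)]
train, valid = split_train_valid(images)
print(len(train), len(valid))  # 8 2
```

Fixing the seed matters when comparing models: resnet18 and ConvNeXt should be judged on the same held-out images.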

Here's the kicker: when I first cleaned my data, the overfitting got worse. The “junk” data I discarded (photos of cooked and sliced squash) had features that were easier to generalize from than the more challenging raw produce photos. Removing them was akin to pulling a crucial block from a Jenga tower; the model's performance collapsed.

I retreated to the basics, returned to the trusty resnet18, and did as much stringent data cleaning as I could stand. As a temporary workaround for the overfitting, I trained for one fewer epoch. Once I had done enough rigorous data cleaning, I could go back to four epochs. Interestingly, convnext's validation loss also improved with the cleaner data, though it remained somewhat prone to overfitting in this project.
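Dropping an epoch is a manual form of early stopping: if validation loss bottoms out and then climbs while training loss keeps falling, the extra epochs only help the model memorize. The sketch below flags those epochs and picks the epoch count with the lowest validation loss. The loss values are made up for illustration and are not results from this project; fastai also provides callbacks such as `EarlyStoppingCallback` and `SaveModelCallback` for automating this.

```python
def overfitting_epochs(train_losses, valid_losses):
    """Return 1-based epoch numbers where validation loss rose while training loss fell."""
    flagged = []
    for i in range(1, len(valid_losses)):
        valid_rose = valid_losses[i] > valid_losses[i - 1]
        train_fell = train_losses[i] < train_losses[i - 1]
        if valid_rose and train_fell:
            flagged.append(i + 1)
    return flagged


def best_epoch_count(valid_losses):
    """Return the 1-based epoch with the lowest validation loss (first one on ties)."""
    return min(range(len(valid_losses)), key=valid_losses.__getitem__) + 1


# Hypothetical per-epoch losses for a four-epoch run.
train_losses = [0.90, 0.55, 0.35, 0.20]
valid_losses = [0.60, 0.48, 0.45, 0.52]
print(overfitting_epochs(train_losses, valid_losses))  # [4]
print(best_epoch_count(valid_losses))                  # 3
```

With numbers shaped like these, training for three epochs instead of four would keep the model near its best validation loss.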

Unlike my previous project, the OS Screenshot Classifier, where labels were readily available and easy to search for, this endeavor presented a more nuanced problem. The key takeaway? There are no shortcuts to get from raw data to clean, labelled data. While my squash/melon classifier won’t soon be winning any Kaggle competitions, it has nevertheless provided a valuable learning experience.

You can try out my classifier below.

I came up with the idea for this classifier a few days ago, and I was further emboldened after reading a recent post from Zhengzhong Tu. It seems that GPT-4 has completely cracked the “blueberry muffin vs. chihuahua” quiz from Karen Zack's Food vs. Animals thread, which sophisticated models struggled with seven years earlier. While today's cutting-edge models are undoubtedly impressive, not everyone can afford the computational power they demand. Fortunately, not everyone needs to. In specialized domains that reward a narrower focus, a well-trained model can go toe-to-toe with giants like GPT-4, and sometimes even beat them, all without breaking the bank.

Computer vision has been solved. pic.twitter.com/km5fYFgwqC

— Zhengzhong Tu (@_vztu) October 13, 2023